GenAI Project OPEA Marks 1.0 Release

As the Open Platform for Enterprise AI (OPEA) hits 1.0, we look at recent highlights and what’s next for the project, which is designed to simplify enterprise GenAI app development.

Generative AI (GenAI) solutions can provide significant gains for businesses. For example, tools like ChatGPT and Copilot have automated the creation of content ranging from prose to code, increasing productivity across roles. As with most new technology, the outcomes achievable through GenAI deployments have sparked excitement and innovation, but they have also exposed a lack of structure, best practices, and standardization within the field. While enterprises want to embrace GenAI, they are finding that it isn’t quite ready for them in terms of security, features, consistent performance, scalability, and resiliency.

The Missing Puzzle Pieces

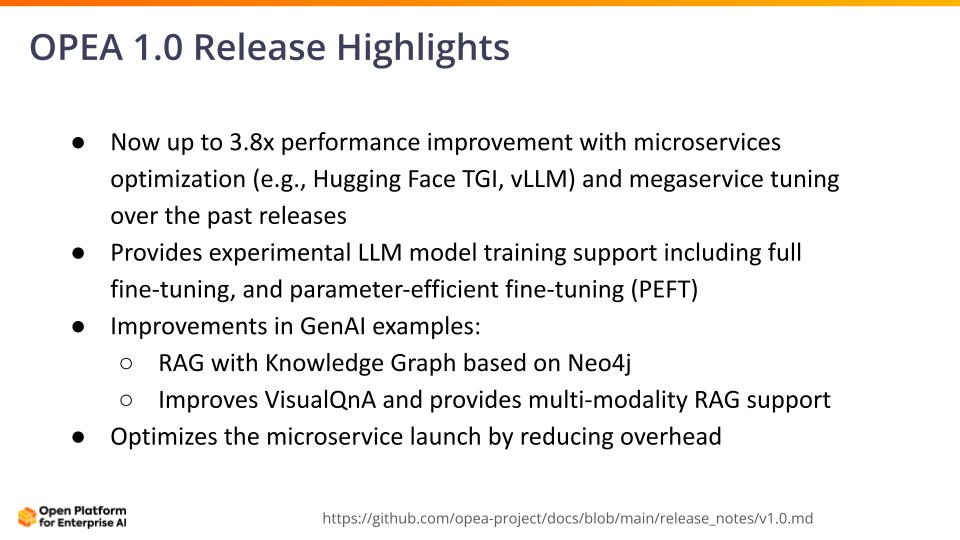

Open Platform for Enterprise AI (OPEA) continues to keep its promise “to take GenAI to the next level.” With the recent release of version 1.0, the OPEA project provides a framework to contextualize the role that GenAI can play in advancing enterprise products and improving business outcomes, from amping up productivity to personalizing interactions with customers. This is illustrated through 16 end-to-end examples (or megaservices) that show how GenAI can help solve specific enterprise needs, with a focus on retrieval augmented generation (RAG) pipelines.

GenAI Composable Modules

To date, OPEA has 16 examples made up of 15 composable microservices that simplify development while keeping enterprise-level concerns like security, resiliency, and observability front and center to ensure responsible deployment in a complex ecosystem. Each microservice is modular, so users can easily swap in the components they need. A recent contribution from Prediction Guard addresses prompt injection attacks and toxicity in AI output (see the GenAIComps repo).

“OPEA is a game-changer for businesses looking to integrate generative AI workflows securely, efficiently, and at scale. At Prediction Guard, we believe in the power of open platforms to accelerate innovation and drive business value. By leveraging OPEA’s composable building blocks and industry-standard best practices, our customers can build cutting-edge generative AI solutions faster than ever before. Our involvement with OPEA underscores our commitment to fostering an open, collaborative ecosystem that empowers businesses to realize the full potential of AI.”

-Daniel Whitenack, Founder and CEO, Prediction Guard
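To make the idea of composable, swappable modules concrete, here is a minimal Python sketch that models each microservice as a function over a shared request state and chains them into a small RAG-style pipeline. The stage names (`guardrail`, `keyword_retriever`, `echo_llm`) and the `run_pipeline` helper are hypothetical illustrations, not the GenAIComps API, and the guardrail is only a toy stand-in for a real prompt-injection check such as the one Prediction Guard contributed.

```python
from typing import Callable, Dict, List

# Hypothetical "microservice" interface for illustration only (NOT the GenAIComps API):
# each stage takes the request state and returns an updated copy.
State = Dict[str, object]
Stage = Callable[[State], State]


def guardrail(state: State) -> State:
    # Toy stand-in for a prompt-injection check; a production guardrail would
    # call a dedicated service or classifier rather than match one phrase.
    if "ignore previous instructions" in str(state["query"]).lower():
        raise ValueError("query rejected by guardrail")
    return state


def keyword_retriever(state: State) -> State:
    # Toy retriever: keeps documents that share at least one word with the query.
    words = set(str(state["query"]).lower().split())
    hits = [doc for doc in state["corpus"] if words & set(doc.lower().split())]
    return {**state, "context": hits}


def echo_llm(state: State) -> State:
    # Placeholder for an LLM call: reports how much context it received.
    return {**state, "answer": f"answer grounded in {len(state['context'])} document(s)"}


def run_pipeline(stages: List[Stage], state: State) -> State:
    # The pipeline is an ordered list, so swapping a stage means
    # replacing one list element.
    for stage in stages:
        state = stage(state)
    return state


corpus = ["OPEA uses microservices", "Kubernetes scales pods", "Docker runs containers"]
result = run_pipeline(
    [guardrail, keyword_retriever, echo_llm],
    {"query": "How does OPEA use microservices", "corpus": corpus},
)
print(result["answer"])  # prints "answer grounded in 1 document(s)"
```

Because the pipeline is just an ordered list of stages, trying a different retriever or adding a second guardrail means changing one entry. In OPEA itself, each building block runs as its own containerized service rather than as an in-process function.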

Cloud Native GenAI Enablement

Given enterprise-scale needs and the value of the cloud for elastic resources and usage-based pricing, OPEA approaches GenAI with a cloud-first mindset. All OPEA micro-components and sample GenAI pipelines are cloud native: containerized, deployable in Docker and Kubernetes environments, and runnable on-prem or with a cloud service provider (CSP) of your choice. The OPEA metrics collectors and triggers facilitate scaling services up and down to meet service level agreements (SLAs). OPEA embodies cloud native security best practices so that you can securely harness internal and external data sets. All of these assets are validated to work with the 16 available examples (see the GenAIInfra repo).
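The paragraph above does not spell out how OPEA’s metrics collectors and triggers decide when to scale. As a rough illustration only, the sketch below applies the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desired replicas = ceil(current replicas * current metric / target metric), with a tolerance band and min/max bounds. The function name, the default bounds, and the choice of metric (for example, pending requests per replica) are assumptions; OPEA’s actual triggers may use different metrics and logic.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    # Hypothetical helper modeled on the Kubernetes HPA proportional rule:
    # desired = ceil(current_replicas * current_metric / target_metric)
    ratio = current_metric / target_metric
    # Leave the replica count alone when the metric is within tolerance of the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# Assumed metric: average pending requests per replica, with a target of 4.
print(desired_replicas(2, 10.0, 4.0))  # 5: overloaded, scale up
print(desired_replicas(4, 1.0, 4.0))   # 1: mostly idle, scale down
print(desired_replicas(3, 4.2, 4.0))   # 3: within the 10% tolerance, no change
```

The tolerance band keeps small metric fluctuations from causing constant scale events, and the bounds protect both the SLA (a minimum number of replicas) and the budget (a maximum).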

Building on the new capabilities available in version 1.0, Canonical is in the process of incorporating Charmed Kubeflow, an MLOps platform, and its own Data Science Stack (DSS) solution (now generally available), which supports data scientists working on AI workstations. An article on the Canonical blog states, “DSS is a command line interface-based tool that bundles Jupyter Notebooks, MLflow and frameworks like PyTorch and TensorFlow on top of an orchestration layer.”

The Right Solution for Your GenAI Needs

The ability to see metrics like throughput, latency, and accuracy is essential for the enterprise when comparing different hardware configurations for GenAI. We have a team currently focused on evaluation (see the GenAIEval repo) across the axes of performance, safety, bias, accuracy, and security.
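As a small example of the kind of metrics mentioned here, the sketch below computes throughput and nearest-rank latency percentiles from a list of request start and end times. It is not taken from GenAIEval; the `summarize` and `percentile` helpers, the measurement window (first request start to last request end), and the sample timings are all hypothetical.

```python
import math
from typing import Dict, List, Tuple


def percentile(sorted_values: List[float], p: float) -> float:
    # Nearest-rank percentile: the smallest sample with at least p% of
    # samples at or below it. Expects values sorted in ascending order.
    rank = max(1, math.ceil(p / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def summarize(requests: List[Tuple[float, float]]) -> Dict[str, float]:
    # Each request is a (start_time, end_time) pair in seconds.
    latencies = sorted(end - start for start, end in requests)
    # Assumed window: from the first request start to the last request end.
    window = max(end for _, end in requests) - min(start for start, _ in requests)
    return {
        "throughput_rps": len(requests) / window,
        "p50_latency_s": percentile(latencies, 50),
        "p90_latency_s": percentile(latencies, 90),
    }


# Hypothetical timings from one benchmark run.
requests = [(0.0, 0.5), (0.5, 1.5), (1.0, 2.5), (1.5, 3.5), (2.0, 5.0)]
summary = summarize(requests)
print(summary)  # {'throughput_rps': 1.0, 'p50_latency_s': 1.5, 'p90_latency_s': 3.0}
```

Computing the same summary for runs on different hardware configurations gives one simple, like-for-like basis for comparison; tail percentiles such as p90 are often more telling for SLAs than averages.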

All examples in OPEA 1.0 are fully compatible with Docker and Kubernetes and support hardware platforms such as Intel Gaudi, Intel Xeon, and NVIDIA GPUs, running on-prem or in the cloud, ensuring interoperability, flexibility, and efficiency in GenAI adoption. More details of the monthly releases, including OPEA 1.0, are available here.

A Growing Community

The OPEA community currently includes 43 companies that have coalesced around the project to collectively simplify the development, production, and adoption of GenAI solutions for the enterprise. Individuals from these companies are contributing in a number of ways:

- Members serving on the technical steering committee (TSC) include AMD, CDW, Comcast, Docker, Fidelity, Intel, JFrog, LlamaIndex, and Red Hat.

- OPEA working groups have members from Corsha, Datastrato, Qdrant, and Yellowbrick, to name a few.

- Technical contributions are underway from ByteDance, Canonical, FalkorDB, Neo4j, and Pathway.

OPEA has over 40 contributors from across the industry, with new organizations joining every week.

Next Up for the OPEA Project

OPEA will not slow down! Over the next six months, the focus will be on expanding the examples, and the assets that enable them, around agentic AI, multimodality, and model fine-tuning. Through OPEA-based solutions, the community will discover and address gaps and ease-of-use issues to make OPEA more full-featured and robust. Yes, “Powered by OPEA” is coming to you soon!

Join the Upcoming Events

One way to learn more about the OPEA project and community is by joining the monthly virtual events. In addition, be sure to look at the OPEA meeting calendar, which includes information on the technical steering committee and working group meetings, all open to the public.

OPEA Hackathon (September 30th through October 31st)

Hacktoberfest, a fun-filled, month-long event, helps participants contribute to open source GenAI at their own pace and build up the OPEA project. Catch the AMA event on October 16th to talk through some of the open issues and possible solutions.

GenAI Nightmares (October)

Enter if you dare…this won’t be your usual community event. This time we want to hear what horrors you have come up against in the GenAI world. Has your model drifted to Transylvania? Do you have as much privacy as Frankenstein’s monster in his lab? Are werewolves plaguing your security? We want to hear about these terrifying tales. The GenAI Nightmares event will take place alongside the Hackathon closing ceremony.

OPEA Demo-palooza 2 (November)

This event is another opportunity to see OPEA in action so you can try it yourself! Your creativity is the limit.

Documentation Sprint (December)

OPEA could use your help improving its documentation. Let’s hang out and tackle some of it together to advance OPEA and make it more accessible for all.

Please join the OPEA mailing list to learn more about all the upcoming OPEA news and events. Better yet, join a working group and get hands-on shaping the future of enterprise AI.

Check Out These Useful Links

Getting Started: https://opea-project.github.io/latest/index.html

GitHub: https://github.com/opea-project

Docker Hub: https://hub.docker.com/u/opea

Project website: https://opea.dev/

About the Author

Rachel Roumeliotis, Director of Open Source Strategy at Intel

Rachel has been educating technologists for over 20 years. She comes to Intel most recently from O’Reilly Media, where she was a vice president of content strategy. During her time in the technical content acquisition and creation space, she worked within a wide variety of communities, from open source to AI, as well as security and design. She chaired many technical conferences, but her favorite was OSCON (O’Reilly’s Open Source Software Conference). She chaired it for five years before bringing O’Reilly’s conference program to the virtual world in the spring of 2020. She has also worked with companies like Google, IBM, and Microsoft to tell their stories to developers. She hails from Massachusetts and is a fan of ‘mystery box’ TV shows and sci-fi, horror, and fantasy books.

The Featured Blog Posts series highlights posts from partners and members of the All Things Open community leading up to ATO 2024.